Numo AI Document Assistant is an AI-powered platform that combines document management, an AI chat interface, and retrieval-augmented generation (RAG). Users can upload documents (PDFs and text files), import content from web pages, and chat with an AI assistant that answers using that content as context.

KEY FEATURES

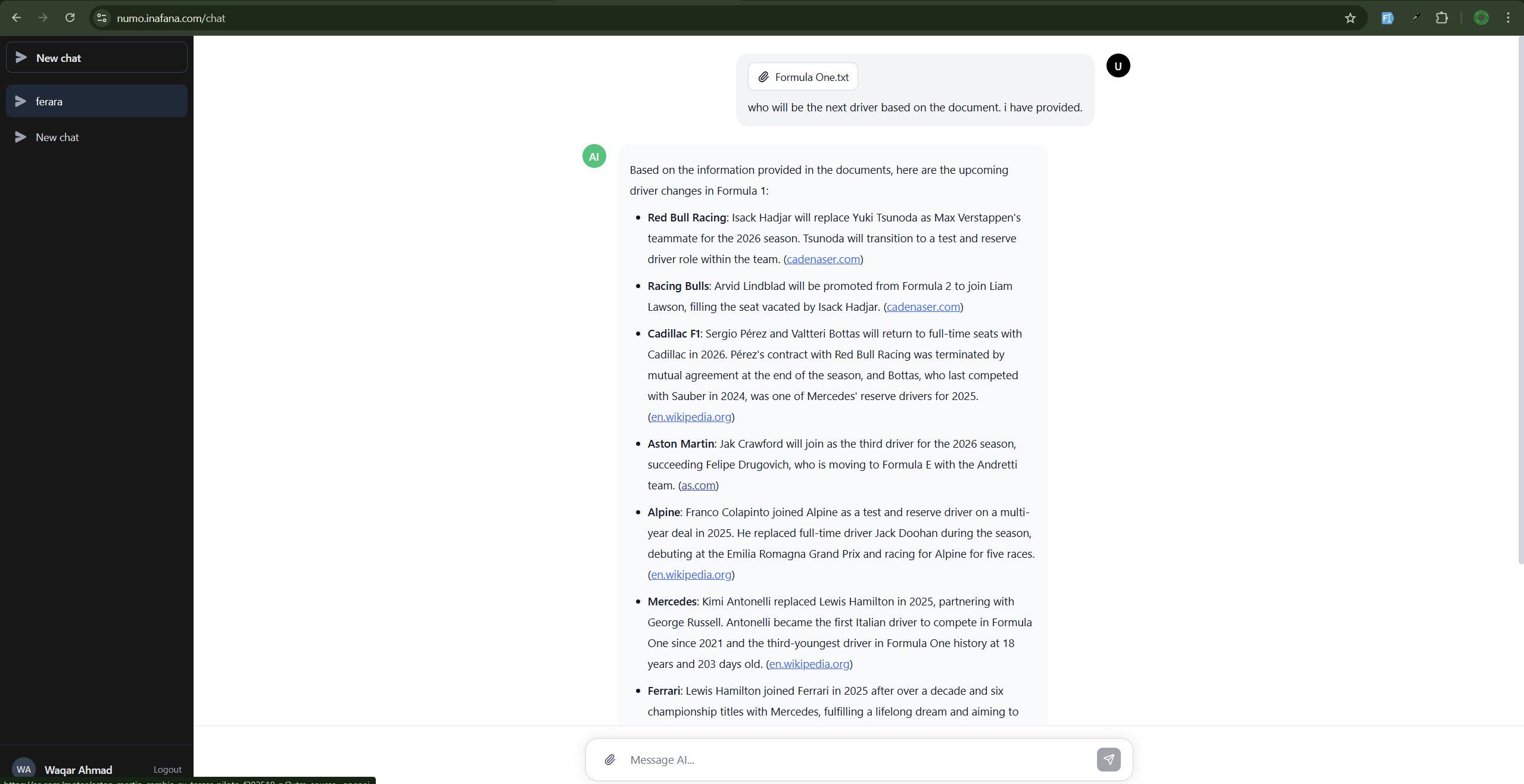

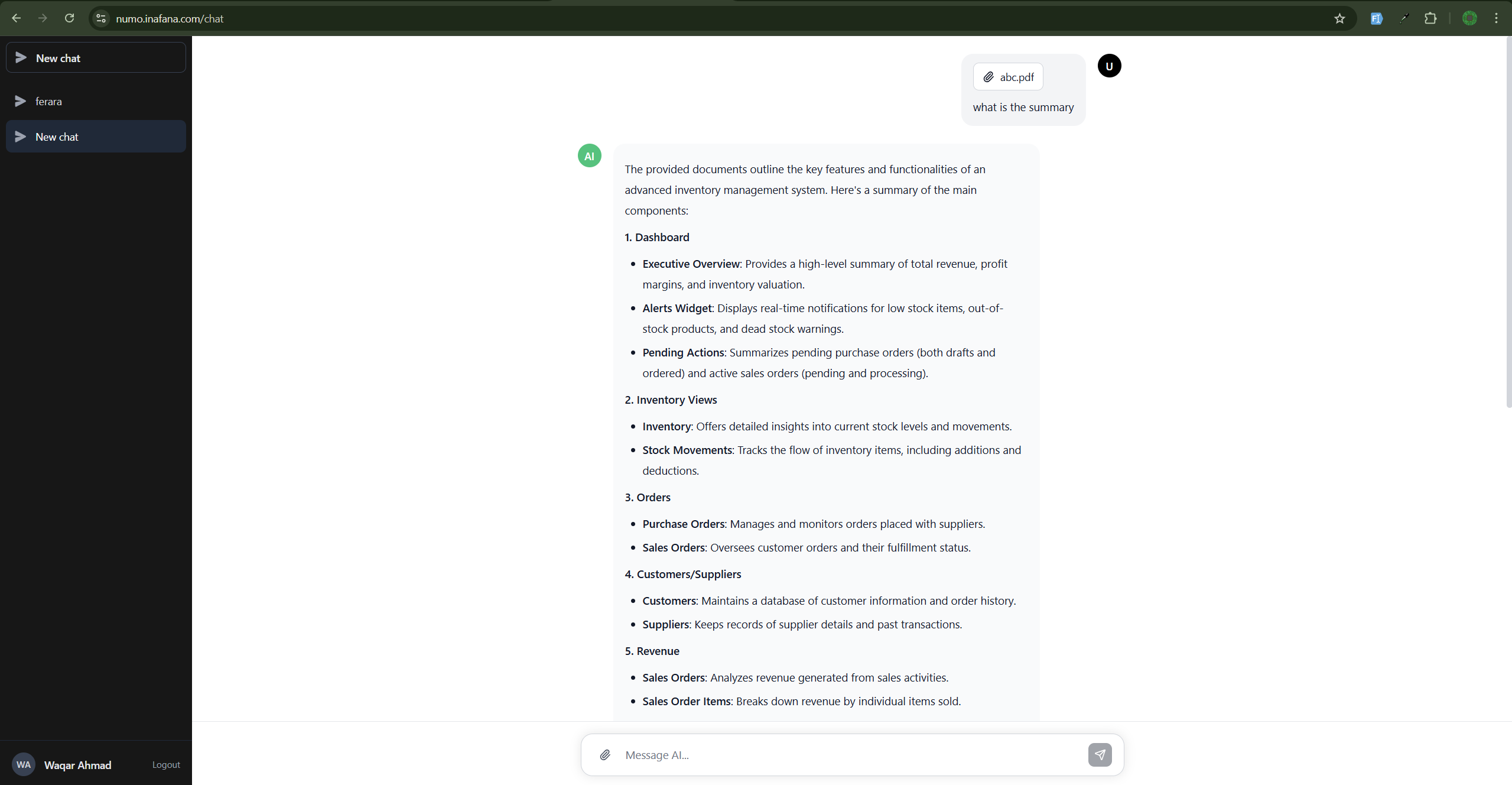

- RAG-Powered Chat Interface - AI chat that uses retrieval-augmented generation to ground its responses in the content of uploaded documents

- Document Processing & Embeddings - Automatic text extraction, chunking, and vector embedding generation for uploaded PDFs and text files

- Web Content Scraping - Extracts text from user-supplied URLs and processes it for inclusion in the knowledge base

- Vector Similarity Search - Semantic search across document embeddings using PostgreSQL with the pgvector extension

- Real-Time Streaming Responses - AI responses are streamed to the client incrementally using server-sent events (SSE)

- Multi-Document Chat Groups - Organize conversations and documents into chat groups for better context management

- Admin Dashboard - Comprehensive admin interface for user management, activity logs, and system monitoring

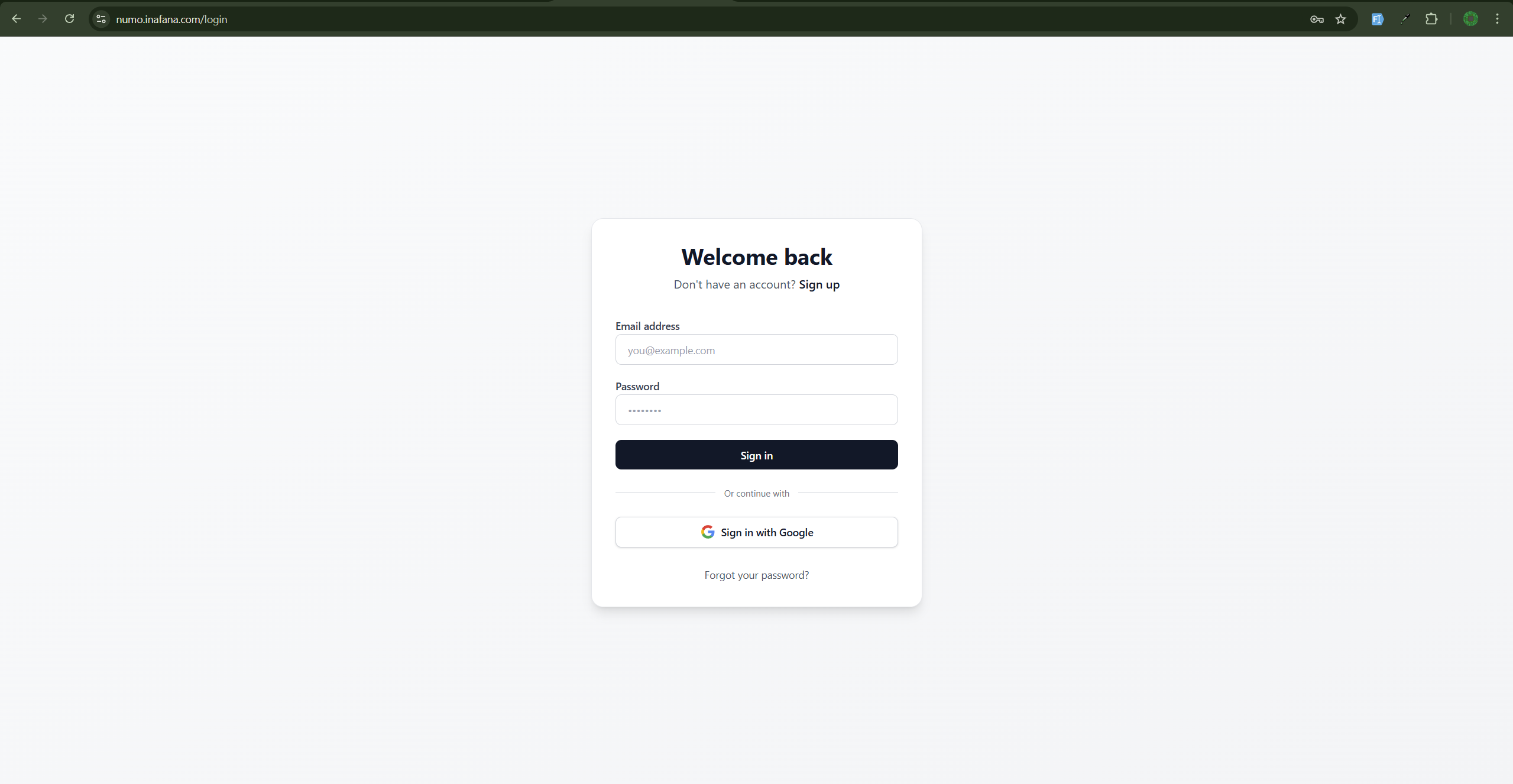

- Secure Authentication - JWT-based authentication with Google OAuth integration
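Streaming relies on the SSE wire format: each message consists of one or more `data:` lines, optionally preceded by an `event:` line, and ends with a blank line. The following sketch shows only that framing. The function name `toSseFrame` and the `done` event name are hypothetical, and this README does not document how the backend actually writes its events.

```typescript
// Hypothetical helper: formats one server-sent event as text.
// Each line of the payload becomes its own "data:" line, an optional
// "event:" line names the event, and a blank line terminates the message.
function toSseFrame(data: string, event?: string): string {
  let frame = "";
  if (event !== undefined) {
    frame += `event: ${event}\n`;
  }
  // Split on any newline style so a multi-line payload cannot break the framing.
  for (const line of data.split(/\r\n|\r|\n/)) {
    frame += `data: ${line}\n`;
  }
  return frame + "\n"; // blank line ends the event
}

// Example: stream partial model output as it arrives, then signal completion.
const pieces = ["Hello", ", world"];
const stream = pieces.map((p) => toSseFrame(p)).join("") + toSseFrame("end", "done");
console.log(stream);
```

In an Express handler, frames like these would typically be sent with `res.write` after setting the `Content-Type: text/event-stream` header.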

TECHNICAL ARCHITECTURE

The platform uses a full-stack architecture: a React frontend with Redux Toolkit for state management, and a Node.js/Express backend backed by a PostgreSQL database. OpenAI's GPT-4o model generates chat responses, and text-embedding-3-small generates vector embeddings. LangChain handles document chunking and text processing, and pgvector provides similarity search over the stored embeddings.
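pgvector's `<=>` operator computes cosine distance, which is 1 minus cosine similarity. A retrieval query can therefore order chunks by their distance to the query embedding and keep the closest few. The sketch below reproduces that ranking in memory to illustrate what the database computes. The `Chunk` type, the `topK` function, and the table and column names in the SQL comment are assumptions, not the project's actual schema.

```typescript
// Hypothetical in-memory stand-in for a pgvector nearest-neighbour query such as:
//   SELECT id, content FROM document_chunks
//   ORDER BY embedding <=> $1 LIMIT $2;
// (table and column names are illustrative; `<=>` is pgvector's cosine distance)

interface Chunk {
  id: number;
  content: string;
  embedding: number[];
}

// Cosine distance = 1 - (a . b) / (|a| |b|), the quantity `<=>` returns.
function cosineDistance(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("embeddings must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return 1 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks closest to the query embedding, nearest first.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, distance: cosineDistance(query, chunk.embedding) }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k)
    .map((scored) => scored.chunk);
}

// Toy 3-dimensional vectors; text-embedding-3-small returns 1536 dimensions by default.
const chunks: Chunk[] = [
  { id: 1, content: "Refund policy", embedding: [0.9, 0.1, 0] },
  { id: 2, content: "Shipping times", embedding: [0, 1, 0] },
  { id: 3, content: "Returns and refunds", embedding: [0.8, 0.3, 0.1] },
];
console.log(topK([1, 0, 0], chunks, 2).map((c) => c.id));
```

Running this ranking inside PostgreSQL avoids loading every embedding into the application. pgvector can also use an approximate index (HNSW or IVFFlat) to speed up the `ORDER BY ... LIMIT` query on large tables.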

DOCUMENT PROCESSING PIPELINE

- Users upload documents (PDFs, text files) or provide URLs for web scraping

- Documents are processed to extract text content

- Text is split into chunks using LangChain, with a configurable chunk size (512 tokens) and chunk overlap (100 tokens)

- Each chunk is converted to a vector embedding using OpenAI's text-embedding-3-small model

- Embeddings are stored in PostgreSQL using the pgvector extension

- During chat, each user query is embedded with the same model and compared against the stored chunk embeddings

- The most relevant chunks are retrieved and injected into the AI prompt as context, so responses are grounded in the user's documents
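With a chunk size of 512 and an overlap of 100, a plain sliding window starts each new chunk 412 tokens after the previous one. As a result, the last 100 tokens of one chunk reappear at the start of the next. The sketch below shows that window over an already-tokenized array. It is a simplification: LangChain splitters such as `RecursiveCharacterTextSplitter` also try to break on separators like paragraphs, lines and spaces. Real token counts would also come from a tokenizer, not the whitespace-style words used here. The function name `chunkTokens` is hypothetical.

```typescript
// Hypothetical sliding-window chunker: fixed-size windows with a fixed overlap.
// Defaults mirror the documented settings (512-token chunks, 100-token overlap).
function chunkTokens<T>(tokens: T[], chunkSize = 512, overlap = 100): T[][] {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("require chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const step = chunkSize - overlap; // 412 with the defaults
  const windows: T[][] = [];
  for (let start = 0; start < tokens.length; start += step) {
    windows.push(tokens.slice(start, start + chunkSize));
    // Stop once a window reaches the end so the tail is not emitted twice.
    if (start + chunkSize >= tokens.length) break;
  }
  return windows;
}

// Illustration only: placeholder words stand in for real model tokens.
const words = Array.from({ length: 1000 }, (_, i) => `w${i}`);
const windows = chunkTokens(words);
console.log(windows.map((w) => w.length)); // length of each chunk
```

The overlap keeps a sentence that straddles a chunk boundary fully present in at least one chunk. The trade-off is that roughly a fifth of the stored text is duplicated.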

The platform also provides PDF generation with Puppeteer, image processing, email notifications, error handling, rate limiting, and other security measures.
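This README does not say which rate-limiting algorithm the platform uses. One common approach is a fixed-window counter, which counts each client's requests within a time window and rejects any beyond a limit. The sketch below illustrates that approach. The class name `FixedWindowRateLimiter`, the limit of 3, and the one-minute window are hypothetical, and the project may use a different algorithm or an existing middleware.

```typescript
// Hypothetical fixed-window rate limiter: at most `limit` requests per client
// in each `windowMs` interval, with the window starting at the client's first request.
class FixedWindowRateLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  // Per-client window start time and request count (never evicted in this sketch).
  private readonly windows = new Map<string, { start: number; count: number }>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed; `now` is injectable for testing.
  allow(clientId: string, now: number = Date.now()): boolean {
    const current = this.windows.get(clientId);
    if (current === undefined || now - current.start >= this.windowMs) {
      // First request from this client, or the previous window has expired.
      this.windows.set(clientId, { start: now, count: 1 });
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false; // limit reached for this window
  }
}

// Example: 3 requests per minute per client (illustrative numbers).
const limiter = new FixedWindowRateLimiter(3, 60000);
const results = [0, 1000, 2000, 3000].map((t) => limiter.allow("user-42", t));
console.log(results);
```

A fixed window is simple but allows short bursts at window boundaries. Sliding-window or token-bucket limiters smooth this out at the cost of more bookkeeping.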